
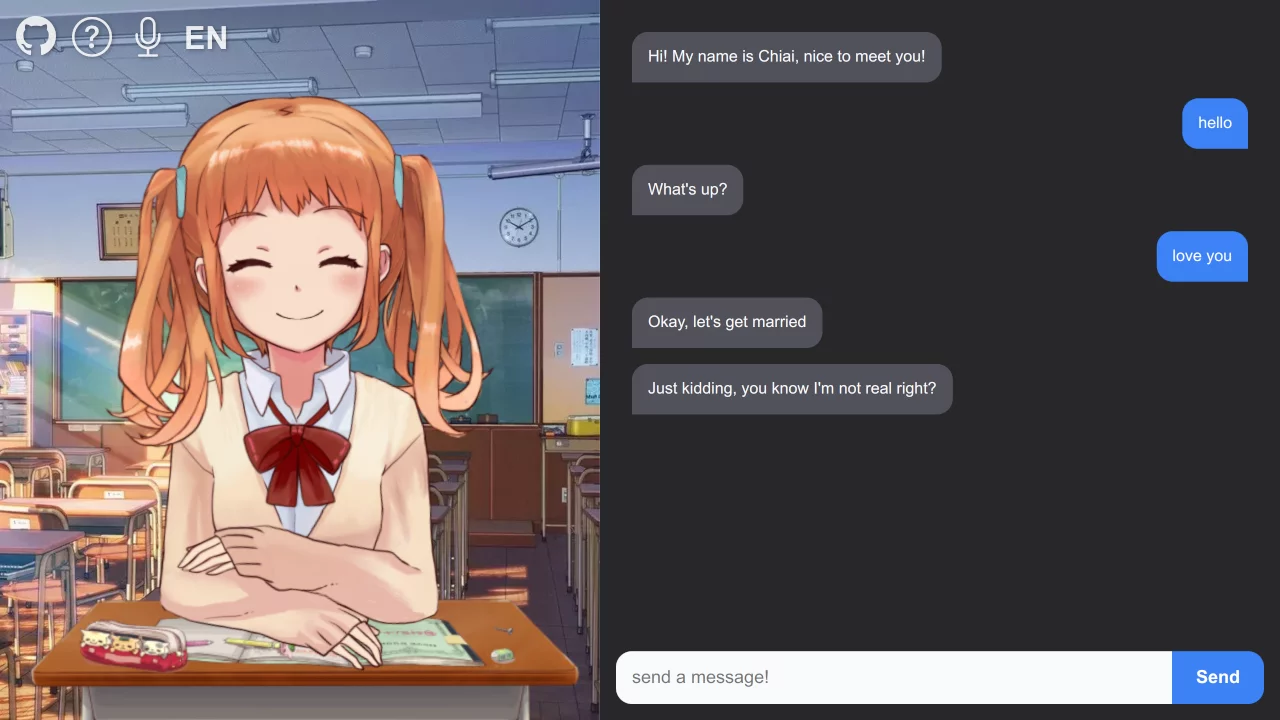

Hello, I’m Chiai!

Chiai (千愛) is your average teenage girl.

Her name comes from my name + AI. Interestingly, it also means “a thousand loves” in Japanese, which fits how much I love developing her. Her model is from the Live2D Sample Model Collection, and everything else is from the internet. I do not claim any of the assets here; credit goes to their rightful owners.

About this project

I made this for Introduction to Computer Science. My task was just to make a video about the AI I wanted to make. Here’s the point: I can’t make videos, so I decided to make the AI a reality instead. And to be honest, this is the most elaborate project I have ever worked on.

Shoutout to nlp.js for Natural Language Processing, pixi-live2d-display for the Live2D display, and Vite for bundling (and TypeScript for the type errors!)

At first, I made this in plain HTML and JavaScript, but I realized that it was too hard to maintain and required so many hacks that nobody else would be able to understand it. If you want the old, outdated version, you can go to the static branch or visit the demo.

Features

The main focus of this project is chatting; everything else is less important. Here’s a list of what you can do:

Chat in multiple languages

Currently, it supports English, Bahasa Indonesia, and Japanese. If you start chatting in another supported language, the AI should guess the language automatically. This is possible because the AI is given separate training data for each language.

Note: Japanese is currently incomplete; it just exists.
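As a rough illustration of what "separate training data for each language" could look like, here is a minimal sketch. The intent names, the utterances, and the `IntentData`, `TRAINING_DATA`, and `toDocuments` names are all made up for this example and are not taken from the project. The flattened `(locale, utterance, intent)` tuples have the shape that nlp.js training calls such as `NlpManager.addDocument(locale, utterance, intent)` expect, but how this project actually feeds its data to nlp.js is an assumption.

```typescript
// Hypothetical per-language training data (not the project's real data).
type Locale = "en" | "id" | "ja";

interface IntentData {
  intent: string;
  utterances: Record<Locale, string[]>;
}

const TRAINING_DATA: IntentData[] = [
  {
    intent: "greetings.hello",
    utterances: { en: ["hello", "hi there"], id: ["halo", "hai"], ja: ["こんにちは"] },
  },
  {
    intent: "greetings.bye",
    // Japanese is left empty here to mirror "incomplete" coverage.
    utterances: { en: ["goodbye", "see you"], id: ["sampai jumpa"], ja: [] },
  },
];

interface TrainingDocument {
  locale: Locale;
  utterance: string;
  intent: string;
}

// Flatten the nested data into one (locale, utterance, intent) entry per example.
function toDocuments(entries: IntentData[]): TrainingDocument[] {
  const docs: TrainingDocument[] = [];
  for (const entry of entries) {
    for (const locale of Object.keys(entry.utterances) as Locale[]) {
      for (const utterance of entry.utterances[locale]) {
        docs.push({ locale, utterance, intent: entry.intent });
      }
    }
  }
  return docs;
}
```

Keeping every language under the same intent name means a reply or Live2D reaction only has to be wired up once per intent, no matter which language the message was in.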

Live2D

This is pure eye candy: it serves no purpose except to drain your data by downloading a megabyte of Live2D assets. In its defense, Live2D is connected to the NLP so that the model can react to certain message intents.
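The intent-to-reaction link could be sketched roughly like this. It assumes the NLP result exposes `intent` and `score` fields (as nlp.js results do) and that the model object has a `motion(group)` method like the one on pixi-live2d-display's `Live2DModel`. The intent names, motion group names, score threshold, and the `pickMotion` and `react` helpers are hypothetical and not taken from the project.

```typescript
// Only the fields this sketch needs from an NLP result.
interface NlpResult {
  intent: string;
  score: number;
}

// Only the method this sketch needs from the Live2D model.
interface MotionPlayer {
  motion(group: string): Promise<boolean>;
}

// Hypothetical mapping from intent names to Live2D motion groups.
const INTENT_MOTIONS = new Map<string, string>([
  ["greetings.hello", "Wave"],
  ["greetings.bye", "Wave"],
  ["user.compliment", "Happy"],
]);

// Return the motion group for a result, or null if the match is too weak
// or the intent has no reaction assigned.
function pickMotion(result: NlpResult, minScore = 0.7): string | null {
  if (result.score < minScore) return null;
  return INTENT_MOTIONS.get(result.intent) ?? null;
}

// Play the reaction on the model; resolves to false when nothing was played.
async function react(model: MotionPlayer, result: NlpResult): Promise<boolean> {
  const group = pickMotion(result);
  return group === null ? false : model.motion(group);
}
```

A lookup table like this keeps the chat logic and the Live2D code loosely coupled: adding a new reaction is one extra map entry.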

Speech Recognition

This feature only works in up-to-date Google Chrome browsers. Use the microphone button at the top-left to speak. Your speech is transcribed into the message box and sent automatically once you stop talking.

Warning: This feature is very buggy and the most error-prone part of the project. Never expect it to work!
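For the curious, the transcribe-then-send flow can be approximated with the browser's Web Speech API, which Chrome exposes as `webkitSpeechRecognition`. This is only a sketch under assumptions: the `listenOnce` helper, its callbacks, and the local typings are invented for this example, and the project's actual code may differ.

```typescript
// Minimal local typings for the parts of the Web Speech API used below.
interface RecognitionResultEvent {
  results: ArrayLike<ArrayLike<{ transcript: string }>>;
}

interface Recognition {
  lang: string;
  interimResults: boolean;
  onresult: ((event: RecognitionResultEvent) => void) | null;
  onend: (() => void) | null;
  onerror: ((event: unknown) => void) | null;
  start(): void;
}

type RecognitionCtor = new () => Recognition;

// Look up the constructor at runtime; it is missing in unsupported browsers.
function getRecognitionCtor(): RecognitionCtor | null {
  const g = globalThis as unknown as Record<string, unknown>;
  const ctor = g["SpeechRecognition"] ?? g["webkitSpeechRecognition"];
  return typeof ctor === "function" ? (ctor as RecognitionCtor) : null;
}

// Listen for one utterance. onInterim receives the running transcript
// (e.g. to fill the message box); onDone fires once recognition ends
// (e.g. to send the message). Returns false if the API is unavailable.
function listenOnce(
  lang: string,
  onInterim: (text: string) => void,
  onDone: (text: string) => void,
): boolean {
  const Ctor = getRecognitionCtor();
  if (Ctor === null) return false;

  const recognition = new Ctor();
  recognition.lang = lang;
  recognition.interimResults = true;

  let transcript = "";
  recognition.onresult = (event) => {
    // Join the best alternative of every result received so far.
    transcript = Array.from(event.results)
      .map((result) => result[0]?.transcript ?? "")
      .join("");
    onInterim(transcript);
  };
  recognition.onend = () => {
    const finalText = transcript.trim();
    if (finalText !== "") onDone(finalText);
  };
  recognition.onerror = () => {
    // Errors are ignored here; onend still fires afterwards.
  };

  recognition.start();
  return true;
}
```

In a page this might be called as `listenOnce("en-US", fillMessageBox, sendMessage)`, where both callbacks are hypothetical functions supplied by the UI. Checking the return value lets the UI hide or disable the microphone button in browsers without support.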

TODO

I’ll put this here because I know full well I won’t do them. Things that could be added or improved, and the reasons why I haven’t done them:

| What | Why |
|---|---|
| More message intents | Takes so much time to make the data all by myself |
| Expressions | Because of how NLP and Live2D interact, it’s hard to integrate. Maybe with nlp.js sentiment analysis? |
| Smarter AI | It’s possible, but the nlp.js documentation makes it so hard that I can’t find anything I want |
| Better web UI/UX | Low priority as long as it works |
| Progressive web app (offline) | Lowest priority, nobody would want to use this offline lmao |